The Genesis Mission and the Rise of Sovereign AI

The Brief: Genesis is an inter-generational message of hope for the US public, with national security as the immediate beneficiary.

The Situation: The White House Launches the Genesis Mission EO

On November 24 the White House launched The Genesis Mission via Executive Order, which might mark the beginning of the first sovereign scientific AI system.

The focus of the Genesis Mission is accelerating transformative scientific discovery and applying it to pressing national challenges.

This Brief breaks down a uniquely consequential AI Executive Order.

AI rests on three inputs: data, compute and algorithms. This plan integrates the first two: federal data and federal infrastructure. This is the part of the stack big tech cannot influence. By extending conditional access to this otherwise inaccessible resource, the US government gains what it has been missing: control of frontier (scientific) models. By prescribing national priority domains, Genesis pre-commits scientific AI to state-defined use-cases rather than lab-defined ones.

With one programme, the US government takes a big swing at reclaiming control over the direction of AI from the labs.

The idea is not unique. The UK launched AI for Science one day earlier, on November 23. Scope and ambition, however, differ vastly. Where the UK targets 2030 for completing data collection across UKRI-owned facilities, labs, and institutes, the Genesis Mission expects the first data plan at 120 days, with operationalisation of the first national priority following at day 270.

The White House wants Genesis to double the productivity of US research within a decade. Why does this programme matter far beyond scientific research?

The Red Line: Policy Analysis of the Genesis Mission

The White House is taking control of the security standards and diffusion pathways of scientific AI.

We’ll follow the implementation timeline to break down the EO highlights.

At 60 days: redirection of diffusion priorities

“Within 60 days of the date of this order, the Secretary shall identify and submit to the APST a detailed list of at least 20 science and technology challenges of national importance that the Secretary assesses to have potential to be addressed through the Mission and that span priority domains consistent with National Science and Technology Memorandum 2 of September 23, 2025, including:

(i) advanced manufacturing; (ii) biotechnology; (iii) critical materials; (iv) nuclear fission and fusion energy; (v) quantum information science; and (vi) semiconductors and microelectronics.”

Why this matters:

These challenges signal what the US government expects scientific AI to do before frontier models become general-purpose.

It will shape what foundation models are fine-tuned for, which sectors get priority access, and which industries get first-mover advantage.

This list is a strategic complement to US semiconductor controls and military supply chain resilience. By placing critical materials, nuclear, fusion and advanced manufacturing at the centre, the US is aligning AI with the domains that determine industrial and military advantage. We wrote about the imperative for the US to reduce foreign dependency in the China rare earth brief.

For the AI safety community, Genesis could be a window to find common ground with the administration on narrow and scientific AI. Performance of domain-specific scientific foundation models could anchor these discussions. If narrow AI exceeds expectations, the effect on public support for racing to AGI is an unknown.

At 90 days: public-private partnerships announced

“Within 90 days of the date of this order, the Secretary shall identify Federal computing, storage, and networking resources available to support the Mission, including both DOE on-premises and cloud-based high-performance computing systems, and resources available through industry partners.”

Why this matters:

This formalises a closer Silicon Valley–Washington collaboration.

The platform will be closed-loop. It’s in or out.

The US government will control security requirements.

This is a giant sandbox to experiment with AI compliance (security, privacy, export-control) and get first-hand information on trade-offs.

“The Secretary shall take necessary steps to ensure that the Platform is operated in a manner that meets security requirements consistent with its national security and competitiveness mission, including applicable classification, supply chain security, and Federal cybersecurity standards and best practices.”

The collaborator list matters because these firms are volunteering to operate under federal security conditions. This compliance corridor could act as a flywheel for wider adoption of federal standards.

Albemarle, AMD, Amazon Web Services, Anthropic, Applied Materials, Atomic Canyon, AVEVA, Cerebras, Chemspeed, Cisco, Collins Aerospace, ComEd, Cornelis Networks, Critical Materials Recycling, Dell Technologies, Emerald Cloud Lab, EPRI, Esri, FutureHouse, GE Aerospace, Google, HPE, Hugging Face, IBM, ISO New England, Kitware, LILA, Micron, Microsoft, MP Materials, New York Creates, Niron Magnetics, Nokia, NVIDIA, Nusano, OLI Systems, OpenAI for Government, Phoenix Tailings, PMT Critical Metals, Qubit, Quantinuum, RadiaSoft, Ramaco, RTX, SambaNova, Scale AI, Semiconductor Industry Association, Siemens, Synopsys, TdVib, Tennessee Valley Authority, and xLight.

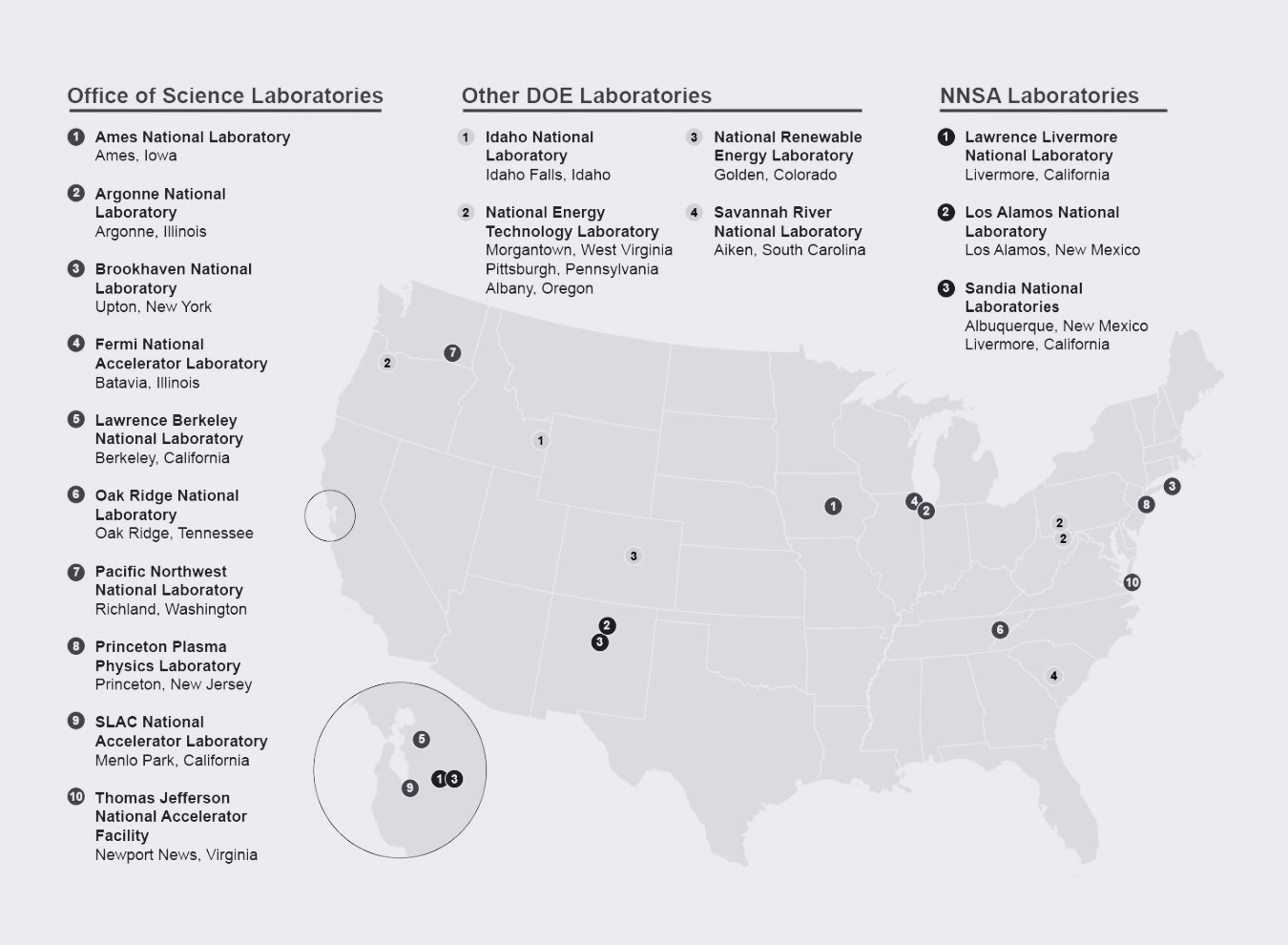

The Department of Energy has unified 17 national laboratories—over 40,000 scientists, engineers and technical staff—into a single scientific AI programme. Combined with partners this amounts to a monumental effort.

At 120 days: initial data inventory complete

“Within 120 days of the date of this order, the Secretary shall:

(i) identify a set of initial data and model assets for use in the Mission, including digitization, standardization, metadata, and provenance tracking; and

(ii) develop a plan, with appropriate risk-based cybersecurity measures, for incorporating datasets from federally funded research, other agencies, academic institutions, and approved private-sector partners, as appropriate.”

Why this matters:

This includes unprecedented access to federal scientific datasets, such as those managed by NASA, the National Institutes of Health and other government science agencies.

Department of Energy supercomputers (Frontier, Aurora) already have capacity for trillion-parameter training runs on exascale hardware. For most labs, this level of compute will remain out of reach.

At 240 days: closing the robotics gap

“Within 240 days of the date of this order, the Secretary shall review capabilities across the DOE national laboratories and other participating Federal research facilities for robotic laboratories and production facilities with the ability to engage in AI-directed experimentation and manufacturing, including automated and AI-augmented workflows and the related technical and operational standards needed.”

Why this matters:

One of the unique contributions of Genesis is the compressed innovation-to-application timeline.

China’s advantage in robotics has second-order effects on cost curves across manufacturing, energy, drones and defence production. Note that addressing China’s lead in physical automation is now a national-security priority.

At 270 days: demonstrated platform operating capability on one national priority area

“Within 270 days of the date of this order, the Secretary shall, consistent with applicable law and subject to available appropriations, seek to demonstrate an initial operating capability of the Platform for at least one of the national science and technology challenges identified pursuant to section 4 of this order.”

Why this matters:

Beyond national priorities, this executive order goes to the heart of public sentiment. There is a reason this project is public: the whole point is that the public knows it exists.

Public confidence in AI remains low. Earlier this year Pew Research found that fewer than two in ten US adults believe that AI will have a positive effect on the US over the next 20 years.

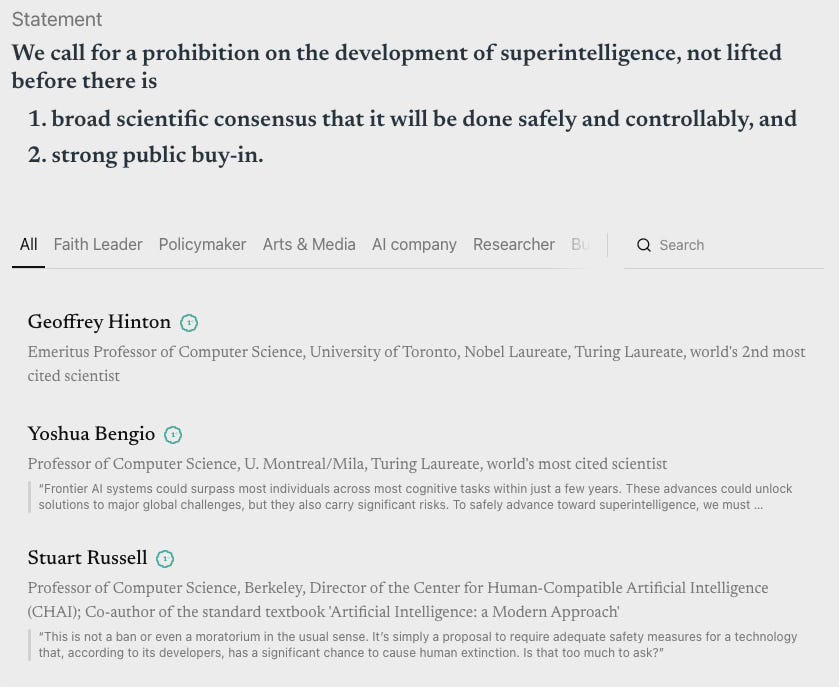

In addition, Stop AI initiatives make public buy-in a prerequisite.

A visible demonstration is designed to shift sentiment. The 270-day timeline de-risks anti-AI ballots before the midterm elections in November.

“In this pivotal moment, the challenges we face require a historic national effort, comparable in urgency and ambition to the Manhattan Project that was instrumental to our victory in World War II and was a critical basis for the foundation of the Department of Energy and its national laboratories.”

If it were a true Manhattan Project, it would not be public. Rather than the Manhattan Project, Genesis more closely resembles the Apollo playbook: a public mission intended to galvanise the American spirit. Not to foster fear, but to provide hope.

The Signal: Public Perception

The White House is repositioning AI as a national mission rather than a source of existential risk. What to watch: whether Genesis shifts public perception of AI’s net benefit to the US. Will success of narrow AI undermine or strengthen public support for AGI? Expect the argument: “if narrow works this well, why stop?”

The government is making a significant step to shift (scientific) AI control away from the frontier labs. What to watch: whether Genesis models close the gap with commercial frontier systems. Is this a first step to sovereign AI?

The US and UK scientific AI programmes are likely to be replicated by other major governments in quick succession. The US remains unique in facing a domestic power struggle with its frontier AI labs. Genesis’ mid-term contributions to national security priorities fit within a wider trend: both the US and UK AI safety labs are reorienting their strategies towards national security.
