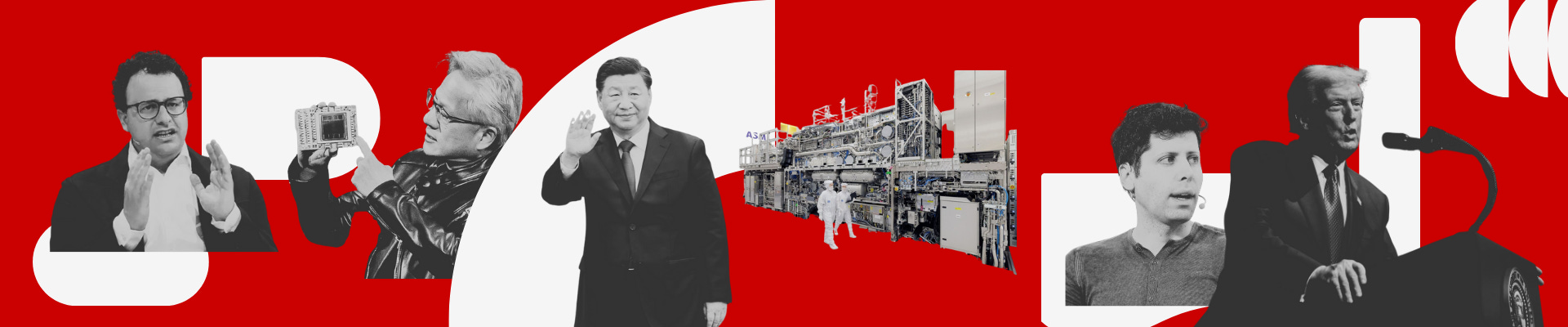

Introducing Red Line

A new approach to frontier risk and foresight

We need better frontier risk analysis and foresight: this is why Red Line exists

Artificial intelligence will define the next century. The question is how we will decide along the way.

Achieving artificial general intelligence (AGI) will, counter-intuitively, mark a beginning rather than an end. Much of what we take for granted today will not serve us then. The future is not a uniform experience. How far will early adopters pull ahead, and how, or when, will others catch up?

Red Line’s mission is simple: bring better futures forward

Red Line stands for

independent;

public; and

multi-disciplinary risk analysis.

Risk is subjective; therefore, analysis must be independent

Independence matters because risk carries direction. It is never neutral. Risk to what, and to whom? A threat to one actor may be a victory lap to another. By making assumptions explicit, Red Line lays out the full board so that decisions can be taken in full view of their consequences. Those who support this work invest in clarity: a public good that would not otherwise exist.

Red Line applies this independence in two ways:

through The Brief (our first public programme), a weekly newsletter providing context on the most consequential moments in AI; and

through bespoke collaborations with governments and companies to translate foresight into consequential policy and strategy.

Our work is public by default

Some of our work is necessarily sensitive. High-trust advisory engagements allow institutions to apply in private the same disciplined reasoning that we model in public. Wherever possible, insights from these engagements are redacted and released once confidentiality allows, because each analysis should ultimately advance understanding of the field. Over time, the lessons from this work feed back into the public record, strengthening the field as a whole.

A collective, cross-disciplinary approach will yield better outcomes, faster

Every person on this planet will be exposed to AI risk within this decade. Everyone deserves access to the knowledge needed to make choices that bring a better future forward. As with any transformative technology, the trajectory of artificial intelligence will ultimately be shaped by countless overlapping decisions, both large and small. If you are reading this, know that yours still matters.

True understanding of AI emerges where disciplines meet. Philosophy must meet psychology, because value formation and human perception shape what we call “alignment”; economics must meet AI research, because incentives, not intentions, determine which systems are built and deployed. Red Line cultivates this exchange and makes it visible at the moments when decisions are made. AI risk is often treated as a single field. It is not. Understanding it requires the meeting of many. Over time, we aim to turn this cross-disciplinary reasoning into a field in its own right.

Beyond broadening access and understanding, Red Line works directly with decision-makers to build situational awareness and to operationalise risk for their specific context. This includes scenario analysis, war-gaming, mechanism teardowns, policy design and coalition building. Work with us.

Together with our community, we will make steady progress on the questions that cannot remain unanswered. What does it mean to win the AI race? Which fork-in-the-road moments lie ahead, and how will we recognise them? If today’s institutions become obsolete, what will replace them, and what will that mean for global politics?

We begin from three assumptions about how AI changes risk

AI is a dual-use, general-purpose technology that will yield defining benefits.

First, AI is a dual-use technology

In security studies, the security dilemma describes how measures one actor takes to increase its own security can leave others less secure, prompting them to respond in kind. The offence–defence balance helps explain whether such technological competition tends to spiral into conflict or stabilise through deterrence. The dilemma’s intensity depends on two factors: whether offensive and defensive capabilities can be clearly distinguished, and whether offence or defence appears to hold the advantage.

The same logic applies to artificial intelligence. In AI safety, defence must succeed every time, while offence needs to succeed only once. As open-source models approach parity with closed counterparts on sensitive benchmarks, including those related to chemical, biological, radiological and nuclear (CBRN) risks, perceptions of advantage may shift faster than the underlying capabilities themselves. And in geopolitics, perception often drives escalation before the technical facts catch up.

The perception of who holds the upper hand drives the pace of the race.

Second, AI behaves as a system

Systems are integrated wholes which cannot be reduced to their individual parts. Systems thinking therefore focuses on relationships, namely the patterns of interdependence and feedback that give rise to complex outcomes.

A stalled state-level bill in the United States or the release of a new transformer architecture is not a discrete event. Each is a signal within a tightly coupled system. As a general-purpose technology, artificial intelligence exerts effects that are both wide and deep, propagating through economic, political, and social domains.

In a world where AI can already write parts of its own code, systems thinking keeps analysis dynamic, broadens the horizon of foresight, and helps surface risks that would otherwise go unanticipated before they materialise.

If an important node changes, the wider risk calculation changes.

Third, AI will yield defining benefits—but not evenly

These benefits extend beyond security into economics, and they can emerge suddenly. Relative-gains theory captures this dynamic: advantage lies not in absolute progress, but in the widening gap between actors. Because frontier capability scales exponentially, a single architectural breakthrough, dataset leak, or hardware supply deal can redraw competitive lines in months, not decades. In this sense, AI is compressing the timeframes of competition.

Economic defining benefits include: the ability to generate, test, and deploy new ideas faster than others; disproportionate value captured from global use by nations that host frontier labs or large-scale model-training infrastructure; and control over model APIs, chips, and data standards, which allows states to shape who can innovate and at what cost.

Security defining benefits include: a compressed OODA loop (observe–orient–decide–act) in crisis and combat; sovereign compute, data, and model-training capacity that insulates a nation from export controls or supply disruptions; and a growing offensive advantage that enhances the speed, precision, and reach of attack relative to defence (from cyber operations and drone swarms to information manipulation and automated targeting).

Taken together, these benefits amount to a structural transformation in the accumulation of power. First, artificial intelligence generates cross-domain spillovers. Gains in capability can translate simultaneously into economic and military advantage; technological progress in one arena reinforces power in the other. Second, these effects are characterised by temporal compression: benefits can materialise within a single innovation cycle, allowing states or firms to leap ahead before competitors can adjust. Third, these dynamics produce cumulative asymmetry, where early advantage compounds through positive feedback loops.

Today, there is a temporal lag between what AI can do and what it is deployed to do. METR’s doubling graph shows the length of tasks that AI systems can complete growing along an exponential curve, while Anthropic’s Economic Index tracks how that capability is actually being used across the economy. No country yet sits fully on that curve. The gap between capability and deployment creates pockets of extraordinary productivity alongside areas of inertia, producing volatility in labour markets and strategic planning alike. This temporal lag is what makes the AI frontier jagged: progress unfolds discontinuously, with uneven access, uneven diffusion, and uneven benefit.

Because power is being redrawn across both security and economic dimensions, the two must be understood together, in context.

Together, these three perspectives (the offence–defence balance from security studies, systems thinking applied to frontier AI, and relative gains from international relations) form a cross-disciplinary lens for understanding AI risk in real time.

The Brief, our first programme, is a weekly AI-risk newsletter that applies this method in practice.

Each issue of The Brief is a deep dive into a topic that matters not only today but structurally, for the direction of AI in the months to come. Read the first issue.

Nothing substitutes for true understanding. Decision-makers cannot lean on the crutch of consensus. It does not exist. Sense-making happens in real time, is complex, and requires work.

In The Brief, we curate what matters and reveal the patterns behind events: a shift in model-evaluation results signalling an offence–defence tilt, systemic ripples flowing from a new technological breakthrough, or capital-allocation trends hinting at a new distribution of gains.

The only difference between red lines and the frontier is a decision. Foresight is not prediction but preparation. Red Line exists to make that preparation possible.

Red Line was founded in 2025 by Arwen Smit to bring foresight to the frontier of AI.

Smit has spent over a decade examining the unintended consequences of emerging technologies. She holds degrees in business from the Stockholm School of Economics and the Rotterdam School of Management, and is now reading International Relations at Cambridge, researching the security implications of open-source AI.